So many third-party analytics, so little time

When it comes to content on the web, be it a video, a webpage or a smartphone app, speed matters. Recent research has shown that 89 percent of consumers are likely to recommend a brand after a good experience on a mobile device, while those who have a negative experience are 62 percent less likely to purchase again from that brand. A three-second delay leads to over 50 percent of customers abandoning the site.

Eliminating Delays to Deliver Content Fast

Tools like AT&T’s Video Optimizer can help you test your mobile videos and apps and check whether they transfer data efficiently – helping you create a fast user experience in your services.

Once your service is ‘in the wild’ with customers, it is important to monitor how your customer base is using your application. There are dozens (if not hundreds) of analytics tools that provide real user measurements (RUM) on crashes, performance, video streaming, and more. This data is an important part of discovering how your application is used, and where your customers are finding issues.

Whenever I test apps with Video Optimizer, I see calls to third-party RUM providers (and, again, I think this is awesome!). Sometimes, however, I see applications that appear to have taken the idea that “if one set of RUM data is good, using six tools will give us even more insight!”

This is not always the case.

In Quantum Physics, Observations Affect Outcomes

The theoretical physicist Werner Heisenberg is famous for his Uncertainty Principle, which states that the more precisely one property of a particle (such as its position) is known, the less precisely a complementary property (such as its momentum) can be known. A related idea, often called the ‘observer effect’ outside of quantum physics, basically means that the more you study and perturb a system, the more you actually change the system and affect the outcomes.

Can Using RUM Affect My Mobile Experience?

Generally, the answer is no. The creators of RUM analytics tools have done a great job of ensuring that the data they collect is sent in a way that does not block or slow delivery of content to the end user. However, there are rare cases where a multitude of analytics calls has blocked application rendering. Here is one example.

Mobile Video Delayed by RUM

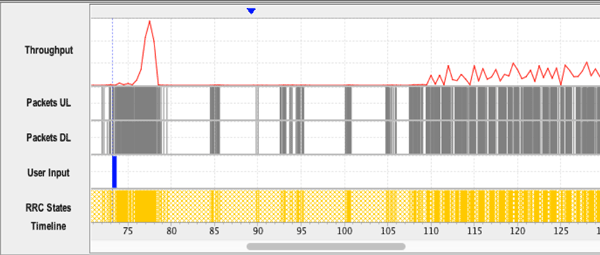

In this example screenshot from Video Optimizer, we are looking at the packets and other information from a trace in which a movie is selected, downloaded, and played. At ~73.2 seconds, a movie was selected from a list (the blue bar in the User Input row indicates that the screen rotated from portrait to landscape to begin playing the movie).

As the screen rotates, the density of packets transmitted (Packets UP and Packets DL rows) vastly increases to an almost solid bar of gray lines. This might lead you to believe that the video has begun downloading for playback. Now look at the throughput in the top row. When video is being downloaded, you would expect a lot of data to be transmitted, and that does not happen until after 75 seconds. So, what is happening in the two seconds before the video starts downloading?

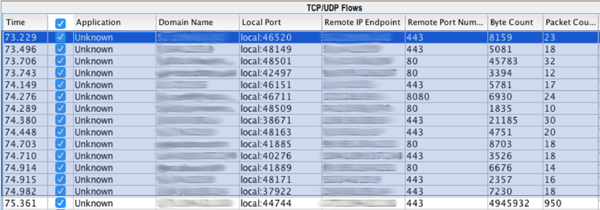

Let’s take a look at the connections taking place. The top row (in dark blue) corresponds to the time the screen rotates. There are then 14 connections established to various servers highlighted in light blue before the video begins downloading at 75.361s.

The last connection is clearly the one carrying the video file, as it is the only connection in the table with a large amount of data transferred (in the byte count column you can see a transfer of ~4.9 MB). Many of the 14 connections that begin prior to the video streaming belong to video RUM measurement providers (I have intentionally obfuscated the domain names and IPs, as the issue is NOT the RUM providers, just the quantity of RUM providers in use). With so many different providers initializing their services, the video is actually delayed nearly two seconds before it can begin to download. Playback starts about a second after the download begins. Normally, starting playback one second after the download begins is good, but once the additional two seconds of delay are added, we have a performance issue.
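The manual analysis above (find the user input, then the first connection that carries a large transfer, and list everything opened in between) can be sketched in a few lines of Python. This is a minimal sketch that assumes the trace's connection table has already been exported into simple records. The `Connection` type, the `delay_before_large_transfer` function, the 1 MB threshold for a “large” transfer, and the domains and timestamps other than 73.2 s, 75.361 s and ~4.9 MB are all hypothetical; none of this is part of Video Optimizer itself.

```python
from dataclasses import dataclass


@dataclass
class Connection:
    start_s: float     # when the connection was opened, in trace seconds
    domain: str        # server the app connected to
    bytes_total: int   # bytes transferred over the connection


def delay_before_large_transfer(user_input_s, connections, min_bytes=1_000_000):
    """Return (delay_s, earlier): delay_s is the time from the user input to the
    first connection moving at least min_bytes (assumed to be the video), and
    earlier lists the connections opened after the input but before that one."""
    after_input = sorted(
        (c for c in connections if c.start_s >= user_input_s),
        key=lambda c: c.start_s,
    )
    for i, conn in enumerate(after_input):
        if conn.bytes_total >= min_bytes:
            return round(conn.start_s - user_input_s, 3), after_input[:i]
    # No large transfer found after the input.
    return None, after_input


# Hypothetical records; only 73.2 s, 75.361 s and ~4.9 MB come from the trace above.
trace = [
    Connection(73.31, "rum-a.example", 3_200),
    Connection(73.52, "rum-b.example", 5_100),
    Connection(74.05, "rum-c.example", 2_700),
    Connection(75.361, "video-cdn.example", 4_900_000),
]

delay, earlier = delay_before_large_transfer(73.2, trace)
print(delay, [c.domain for c in earlier])
# prints: 2.161 ['rum-a.example', 'rum-b.example', 'rum-c.example']
```

Run against a full export of a trace's connection table, the returned list shows which connections, RUM or otherwise, were opened between the tap and the start of the video download.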

Network Conditions Accentuate the Effect

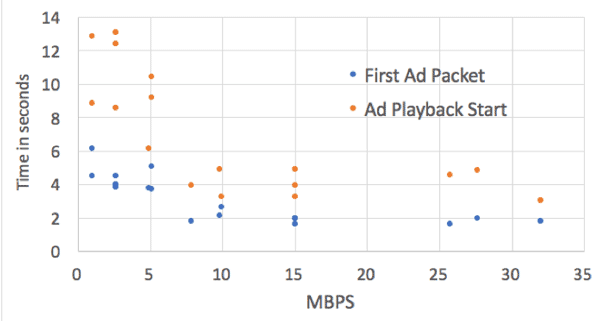

We can all agree that a two- to three-second delay after selecting a movie is a poor experience. But the data I am showing above is from a fast mobile network. What happens if we turn down the knob and repeat these experiments on a slower network? The network attenuation feature in Video Optimizer allows us to run the same test under different network conditions.

What we find is that if the network throughput is under 5 Mbps, the connection delay nearly doubles to four seconds, and the time to video playback jumps to over five seconds (and sometimes over 10 seconds!).
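A quick calculation with the approximate figures from this post shows how much of the wait passes before the video download even starts. This sketch assumes roughly 2.16 s before the download and about 3.16 s to playback on the fast network, and uses the stated round figures (about 4 s and 5 s) for the slower one; since playback there took over five seconds, the second share is a rough upper estimate, not a measurement. `setup_share` is a hypothetical helper.

```python
def setup_share(pre_download_s, time_to_playback_s):
    """Fraction of the time to playback that elapses before the video download starts."""
    if time_to_playback_s <= 0:
        raise ValueError("time_to_playback_s must be positive")
    return pre_download_s / time_to_playback_s


# Approximate figures from this post (seconds after the movie is selected).
scenarios = {
    "fast network": (2.16, 3.16),   # download at ~2.16 s, playback ~1 s later
    "under 5 Mbps": (4.0, 5.0),     # ~4 s before download, playback over 5 s
}

for name, (pre, total) in scenarios.items():
    share = setup_share(pre, total)
    print(f"{name}: {share:.0%} of the wait passes before the video download starts")
```

By this rough measure, most of the wait in both cases passes before the video starts downloading.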

Test your app. Use RUM tools to measure how your customers interact with your application. When choosing the data you collect, also keep in mind that as you add more third-party connections for measurement, the measurements themselves can impact the performance of your service.