AT&T Video Optimizer

Opening Connections

Introduction

Many applications handle the opening of connections inefficiently.

A typical application startup consists of an initial TCP burst, followed by a series of bursts spread out over time. Unfortunately, this approach can dramatically slow down the application’s response time and waste energy on the device, because every time an application causes a new packet burst, it increases latency across the board.

A better approach is to download as much content as quickly as possible when opening a connection.

This Best Practice Deep Dive gives some background on what happens when a TCP connection is opened. It explains how opening connections inefficiently can especially impact a wireless application, and it offers recommendations on how to open connections more efficiently.

Background

Transmission Control Protocol (TCP) is the protocol used with the Internet Protocol (IP) to send data over the Internet. The two together are known as TCP/IP.

IP handles the delivery of data; TCP handles the individual pieces of data, which are called packets or segments. En route, the data is broken up into packets for efficient routing through the Internet; the packets are then reassembled in order when they reach their destination.

TCP is known as a connection-oriented protocol, which means that a connection is established and maintained by the application programs on both sides of the transmission. A three-way handshake between two parties is the standard process used to open a TCP connection. This is managed through the TCP Header.

A TCP header contains nine one-bit flags (also known as control bits):

- NS (1 bit) – ECN-nonce concealment protection.

- CWR (1 bit) – The Congestion Window Reduced (CWR) flag is set by the sending host to indicate that it has received a TCP segment with the ECE flag set and has responded in the congestion control mechanism.

- ECE (1 bit) – ECN-Echo indicates:

  - If the SYN flag is set (1), that the TCP peer is ECN capable.

  - If the SYN flag is clear (0), that a packet with the Congestion Experienced flag set in the IP header was received during normal transmission.

- URG (1 bit) – Indicates that the Urgent pointer field is significant.

- ACK (1 bit) – Indicates acknowledgment. All packets after the initial SYN packet sent by the client should have this flag set.

- PSH (1 bit) – Push function. Asks to push the buffered data to the receiving application.

- RST (1 bit) – Reset the connection.

- SYN (1 bit) – Synchronize sequence numbers. Only the first packet sent from each end should have this flag set. Some other flags change meaning based on this flag; some are valid only when it is set, and others only when it is clear.

- FIN (1 bit) – No more data from sender.
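As an illustration of how these flags are laid out, the following minimal sketch decodes them from a raw TCP header. It assumes the nine-flag layout listed above, in which the flags occupy the low nine bits of the 16-bit word at byte offset 12. The names `decode_tcp_flags` and `make_header` are hypothetical helpers written for this example; they are not part of Video Optimizer.

```python
import struct

# Flag names in bit order, most significant first, for the low 9 bits
# of the 16-bit word at byte offset 12 of the TCP header.
FLAG_NAMES = ["NS", "CWR", "ECE", "URG", "ACK", "PSH", "RST", "SYN", "FIN"]


def decode_tcp_flags(header: bytes) -> list:
    """Return the names of the flags set in a raw TCP header."""
    if len(header) < 14:
        raise ValueError("need at least the first 14 bytes of the TCP header")
    # Bytes 12-13: 4-bit data offset, 3 reserved bits, then the 9 flag bits.
    (word,) = struct.unpack("!H", header[12:14])
    flag_bits = word & 0x01FF
    return [
        name
        for i, name in enumerate(FLAG_NAMES)
        if flag_bits & (1 << (8 - i))
    ]


def make_header(flag_bits: int) -> bytes:
    """Build a hypothetical 20-byte header with only the flag bits filled in."""
    data_offset = 5  # header length in 32-bit words, no options
    word = (data_offset << 12) | (flag_bits & 0x01FF)
    return bytes(12) + struct.pack("!H", word) + bytes(6)


# 0x002 is SYN alone; 0x012 is SYN plus ACK.
print(decode_tcp_flags(make_header(0x002)))  # ['SYN']
print(decode_tcp_flags(make_header(0x012)))  # ['ACK', 'SYN']
```

The second call shows the flag combination carried by the server's reply during connection setup.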

Opening a TCP connection normally begins when one side initiates a TCP request which is responded to by the other side. The process also works if the two sides initiate the procedure simultaneously. When a simultaneous attempt occurs, each side receives a SYN segment which carries no acknowledgment after it has sent a SYN. Arrival of an old duplicate SYN segment can potentially make it appear, to the recipient, that a simultaneous connection initiation is in progress. Proper use of reset segments can disambiguate these cases.

The purpose of the three-way handshake is to prevent old, duplicate connection initiations from causing confusion. The reset (RST) control message was devised to handle such cases. If the receiving side is in a non-synchronized state (for example, SYN-SENT or SYN-RECEIVED), it returns to LISTEN on receiving an acceptable reset.

Once the connection is established, data can begin to be passed between the two sides of the transmission.
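The normal case of the three-way handshake, where one side initiates and the other responds, can be sketched as a small simulation. This is a simplified model and not a TCP implementation: it only shows how the SYN and ACK flags and the sequence and acknowledgment numbers relate across the three segments. The `Segment` class, the `three_way_handshake` function, and the initial sequence numbers used here are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    sender: str
    flags: tuple
    seq: int
    ack: Optional[int]  # None when the ACK flag is not set


def three_way_handshake(client_isn: int, server_isn: int) -> list:
    """Return the three segments exchanged to open a connection."""
    mod = 2 ** 32  # sequence numbers wrap at 32 bits
    # 1. The client sends SYN carrying its initial sequence number (ISN).
    syn = Segment("client", ("SYN",), client_isn, None)
    # 2. The server replies with its own SYN and acknowledges the client's.
    syn_ack = Segment(
        "server", ("SYN", "ACK"), server_isn, (client_isn + 1) % mod
    )
    # 3. The client acknowledges the server's SYN; the connection is open.
    ack = Segment(
        "client", ("ACK",), (client_isn + 1) % mod, (server_isn + 1) % mod
    )
    return [syn, syn_ack, ack]


for segment in three_way_handshake(client_isn=100, server_isn=300):
    print(segment)
```

Each SYN consumes one sequence number, which is why every acknowledgment is the peer's initial sequence number plus one.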

The Issue

On the wired web, opening connections inefficiently is less of an issue because network bandwidth is usually ample enough to mask the inefficiency. The wireless environment, however, has constrained radio networks and greater latency, so efficiencies must be found wherever possible, including in the opening of connections between a server and a mobile device.

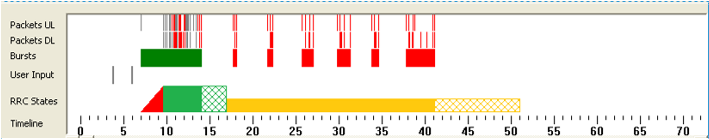

The following chart (figure 1) shows trace results from a typical application startup that illustrates the issue.

These trace results were captured using the AT&T Video Optimizer diagnostic tool. The Video Optimizer tool collects trace data from an application, evaluates it against recommended best practices, and generates analytical results.

Figure 1: Trace results from an application startup.

The data in figure 1 (taken from the Trace chart in the Diagnostics tab of the Video Optimizer tool) shows, among other things, the bursts of data as an application starts up. In the “Bursts” row in this chart, the burst (in green) is triggered by user input, while the bursts (in red) are initiated by the application. The white spaces between the bursts indicate time in which no data transfer is taking place.

By spreading out the application-initiated bursts (in red) with periods in which no data is transferred (white spaces), the application is opening connections inefficiently.

Best Practice Recommendation

The Best Practice Recommendation is to open network connections efficiently. You can do this by following some simple practices:

- Group TCP packets closely together when opening the connection.

- Download the content that users request as quickly as possible.

- Prefetch content. Prefetching refers to downloading any content that you anticipate your users will request next. For best practice recommendations about prefetching, see Deep Dive: Content Prefetching.

- When using periodic connections, set the timing between transfers as long as reasonably possible. For best practice recommendations about managing periodic connections, see Deep Dive: Periodic Transfers.

- Piggyback your analytics with other data. This will reduce the number of standalone pings.

Following these practices will lead to faster launches, reduced energy consumption, reduced latency in the network, and happier users.
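As one way to picture the piggybacking recommendation above, the sketch below queues analytics events in memory and attaches them to the next request the application was going to send anyway. The `AnalyticsBatcher` class, its method names, and the `"analytics"` field are all hypothetical. A real application would adapt the idea to its own request format and would also need to flush events that wait too long.

```python
import json


class AnalyticsBatcher:
    """Queue analytics events and attach them to the next outgoing request."""

    def __init__(self):
        self._pending = []

    def record(self, event: dict) -> None:
        # No network activity here: the event just waits in memory.
        self._pending.append(event)

    def attach_to(self, payload: dict) -> bytes:
        """Return a request body with all queued events piggybacked on it."""
        body = dict(payload)
        if self._pending:
            body["analytics"] = self._pending
            self._pending = []
        return json.dumps(body).encode("utf-8")


batcher = AnalyticsBatcher()
batcher.record({"event": "app_launch"})
batcher.record({"event": "button_tap"})

# Both events ride along with a content request the app needed to make.
request_body = batcher.attach_to({"query": "latest_feed"})
```

With this approach the two events cost no extra bursts, whereas sending each one as a standalone ping would add two.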

Figure 2 shows the trace results from a typical application startup taken from the Diagnostics tab of the Video Optimizer tool as in figure 1. The “Bursts” row in this chart shows the burst triggered by user input (in green), the bursts initiated by the application (in red), and the white spaces between the bursts in which no data transfer is taking place.

Figure 2: Trace results from an application startup with suggestion for grouping bursts.

If all of the application-initiated bursts (in red) in this example were grouped as tightly as possible, and the time in which no data is being transferred (the white spaces) were removed, the same amount of content could be downloaded in as little as 17 seconds instead of 40 seconds, a real improvement in the response time of the application.
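The arithmetic behind this comparison can be sketched as follows. The burst intervals are hypothetical values chosen only so that the totals match the 40-second and 17-second figures quoted above; they are not read from the actual trace. The sketch also assumes the bursts do not overlap.

```python
def spread_duration(bursts):
    """Time from the start of the first burst to the end of the last one."""
    return max(end for _, end in bursts) - min(start for start, _ in bursts)


def grouped_duration(bursts):
    """Time spent transferring, with the idle gaps between bursts removed."""
    return sum(end - start for start, end in bursts)


# Hypothetical (start, end) burst intervals in seconds.
bursts = [(0, 5), (9, 12), (16, 20), (27, 29), (37, 40)]

spread = spread_duration(bursts)
grouped = grouped_duration(bursts)
print(spread, grouped)  # 40 17
print(f"idle time removed: {spread - grouped} s")  # idle time removed: 23 s
```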

In addition to response time, another reason to group requests is the efficient use of radio resources. Several of the requests in this example turn the radio on just for that segment. A better solution from the perspective of radio resources would be to group the requests together, so that the radio would be turned on once. This would improve performance and reduce energy waste.
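To make the radio argument concrete, the following sketch counts how many times the radio has to be turned on for a given set of bursts. It assumes a simplified model in which the radio stays on for a fixed period after each burst and then switches off. Both the burst intervals and the `tail_seconds` value are hypothetical; real radio state timers vary by network and device.

```python
def count_radio_wakeups(bursts, tail_seconds):
    """Count how often the radio must power up for (start, end) bursts.

    Assumption: the radio stays on for tail_seconds after each burst, so a
    burst that starts within that window reuses the already active radio.
    """
    wakeups = 0
    radio_off_at = None
    for start, end in sorted(bursts):
        if radio_off_at is None or start > radio_off_at:
            wakeups += 1
        off = end + tail_seconds
        radio_off_at = off if radio_off_at is None else max(radio_off_at, off)
    return wakeups


# Hypothetical bursts in seconds: spread out versus grouped back to back.
spread_bursts = [(0, 5), (9, 12), (16, 20), (27, 29), (37, 40)]
grouped_bursts = [(0, 5), (5, 8), (8, 12), (12, 14), (14, 17)]

print(count_radio_wakeups(spread_bursts, tail_seconds=2))   # 5
print(count_radio_wakeups(grouped_bursts, tail_seconds=2))  # 1
```

Under these assumptions, the same five transfers need five radio activations when spread out but only one when grouped.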