AT&T Video Optimizer

Multiple Simultaneous TCP Connections

Introduction

It is common for applications on the wired web to open multiple persistent Transmission Control Protocol (TCP) connections so that different content can be served simultaneously from the same server.

Persistent connections allow the same TCP connection to send and receive multiple HTTP requests/responses instead of opening a new TCP connection for every request/response pair. When an application opens fewer TCP connections and keeps them open for a longer period, it causes less network traffic, uses less time establishing new connections, and allows the TCP protocol to work more efficiently.

Because of these advantages and others, many mobile developers have followed the same practice of opening multiple persistent connections in their wireless apps without being aware that latency caused by opening multiple connections is more of an issue on wireless networks than wired ones.

If a wireless app opens too many simultaneous connections, performance will begin to decrease more quickly than it will in a wired app. As a result, the wireless app will appear less responsive, and energy will be wasted from the radio being turned on and off.

This Best Practice Deep Dive looks at how TCP connections are established to see why persistent connections are more efficient. It examines the issue of latency when multiple persistent connections are opened on a wireless network, and offers recommendations for smarter connection management in mobile apps to deal with this issue.

Background

By examining how TCP connections are established between a client and server, we can see why persistent connections are a more efficient connection strategy.

TCP is responsible for dividing a message into the packets (or segments) that IP carries and for reassembling them into the complete message at the other end. In the OSI communication model, TCP is in layer 4, the Transport Layer.

A TCP connection is established with a three-way handshake, which costs a round trip before any data can be sent, and several additional round-trip times (RTTs) are then needed for TCP to reach an appropriate transmission speed. The three-way handshake is also known as SYN-SYN-ACK, which is short for SYN, SYN-ACK, and ACK.

- SYN: A SYN segment initiates the three-way handshake. When one node wants to send data to another using TCP, it begins by sending a SYN segment. SYN stands for Synchronization packet (or segment).

- SYN-ACK: In response, the server replies with a SYN-ACK. This stands for Synchronization-Acknowledgment segment.

- ACK: Finally, the client sends an ACK back to the server. This stands for Acknowledgment segment.

After this three-way handshake is completed, both the client and server have received an acknowledgment and the connection is established.
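
The cost of the handshake can be observed directly by timing how long it takes to open a connection. The following is a minimal Python sketch, not part of the Video Optimizer tool: `time_connects` and `start_local_listener` are hypothetical names, and the loopback listener exists only so the example runs without a network. On loopback the times are tiny; over a wireless link each one would include at least one full round trip.

```python
import socket
import time


def time_connects(host: str, port: int, count: int) -> list[float]:
    """Open `count` fresh TCP connections in sequence and time each one."""
    durations = []
    for _ in range(count):
        start = time.perf_counter()
        # create_connection() returns only after the SYN, SYN-ACK, ACK
        # exchange has completed on the client side.
        with socket.create_connection((host, port), timeout=5):
            durations.append(time.perf_counter() - start)
    return durations


def start_local_listener() -> socket.socket:
    """Hypothetical helper: a loopback listener standing in for a real server."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(16)
    return server


if __name__ == "__main__":
    listener = start_local_listener()
    host, port = listener.getsockname()
    for i, seconds in enumerate(time_connects(host, port, 3), start=1):
        print(f"connection {i}: connect took {seconds * 1000:.3f} ms")
    listener.close()
```

Every new connection pays this setup time again, which is the cost that connection reuse avoids.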

Because this process is required each time a TCP connection is established, it causes user-perceived latency. This is why persistent connections, which use the same TCP connection to send and receive multiple HTTP requests and responses instead of opening a new connection for each, were proposed and became the default behavior in Hypertext Transfer Protocol (HTTP) 1.1.

By keeping open and reusing TCP connections to transmit sequences of messages, persistent connections reduce the number of connection establishments and the resulting latency and processing overhead.
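
The difference can be illustrated by counting how many TCP connections a server accepts under each strategy. The sketch below uses Python's standard `http.client` and `http.server` modules; `CountingHandler`, `fetch_with_new_connections`, `fetch_with_one_connection`, and `count_connections` are names invented for this example, and the loopback server is an assumption made so that it runs on its own.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class CountingHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # lets http.server keep connections open
    connections = 0                # TCP connections accepted so far
    lock = threading.Lock()

    def setup(self):
        # setup() runs once per accepted TCP connection, not once per request.
        super().setup()
        with CountingHandler.lock:
            CountingHandler.connections += 1

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def fetch_with_new_connections(host, port, paths):
    for path in paths:
        conn = http.client.HTTPConnection(host, port, timeout=5)
        conn.request("GET", path)
        conn.getresponse().read()
        conn.close()  # a new handshake is needed for the next request


def fetch_with_one_connection(host, port, paths):
    conn = http.client.HTTPConnection(host, port, timeout=5)
    for path in paths:
        conn.request("GET", path)
        conn.getresponse().read()  # drain the response before reusing
    conn.close()


def count_connections(fetch, paths):
    """Run `fetch` against a local server and return connections accepted."""
    CountingHandler.connections = 0
    server = ThreadingHTTPServer(("127.0.0.1", 0), CountingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        fetch("127.0.0.1", server.server_address[1], paths)
    finally:
        server.shutdown()
        server.server_close()
    return CountingHandler.connections


if __name__ == "__main__":
    paths = ["/a", "/b", "/c"]
    print("new connection per request:", count_connections(fetch_with_new_connections, paths))
    print("one persistent connection: ", count_connections(fetch_with_one_connection, paths))
```

With three requests, the first strategy performs three handshakes and the second performs one.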

The Issue

The bandwidth of a wireless network is more physically constrained in size and scope than a wired network. With less overall bandwidth to work with, the number and size of connections that are using that bandwidth must be managed more carefully because they have a greater impact on performance. This is why latency is more of an issue on wireless networks than on wired ones.

If an app opens too many simultaneous TCP connections, each connection gets a smaller share of the available bandwidth. This means that throughput will be limited, performance will decrease, and energy will be wasted.

The following example demonstrates this issue using trace data captured from an application using the AT&T Video Optimizer diagnostic tool. The Video Optimizer tool collects trace data from an application, evaluates it against recommended best practices, and generates analytical results.

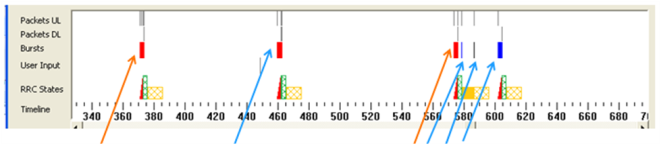

The following image (figure 1) is taken from the Diagnostics chart in the Video Optimizer tool and it shows some results from the Unnecessary Connections—Multiple Simultaneous Connections test.

Figure 1: Video Optimizer Diagnostics Chart showing results from the Unnecessary Connections—Multiple Simultaneous Connections test.

Note the following about figure 1: the orange arrows point to periodic pings approximately 200 seconds apart, and the blue arrows point to requests to an analytics provider. (The three bursts between 580 and 610 seconds are the delayed closing of connections.)

In this example (figure 1), there are separate connections for keepalive requests (the "periodic pings" indicated by the orange arrows), and analytics requests (indicated by the blue arrows). Some of the blue arrows indicate a delay in the closing of some connections. This is the subject of another Best Practice topic, Closing Connections, and is another area tested by the Video Optimizer tool.

The results displayed in figure 1 show that it would have been more efficient to bundle a keepalive request and an analytics request into one connection. For instance, if the second analytics connection were combined with the keepalive connection at the 580 second mark (and if all the connections were closed promptly), there would be an energy savings of 17 joules.

We've seen from this example that the issue of perceived latency from too many simultaneous TCP connections can be mitigated through smart connection management. The following Best Practice Recommendation section describes some strategies for achieving this.

Best Practice Recommendation

The Best Practice Recommendation is to manage the TCP connections in your application more efficiently.

Two techniques for doing this are:

- TCP persistent connections

- HTTP Pipelining

TCP Persistent Connections

Whenever possible, it is good practice to group requests together in order to improve performance, save energy, and reduce bandwidth use. Since a single request for more data is likely to provide a better user experience than several smaller requests, bundling (or batching up) multiple requests at the application level is recommended. This can be done with persistent connections.

Persistent connections (also called keepalives or connection reuse) are the technique of using the same TCP connection to send and receive multiple HTTP requests/responses, as opposed to opening a new TCP connection for every request/response pair.

There are several advantages to using persistent connections:

- Less network traffic: Fewer TCP connections need to be set up and taken down.

- Reduced latency on subsequent requests: The initial TCP handshake is done once at the beginning of the single persisted connection instead of being done for several non-persistent connections.

- TCP works more smoothly: Longer lasting connections allow TCP sufficient time to determine the congestion state of the network and react appropriately.

Using persistent connections to group requests not only improves network performance, but it is also a more efficient use of radio resources. By grouping requests together, the radio is turned on once for a whole group of requests, rather than being turned on for each individual request. This improves performance and reduces energy waste.

For example, if an application uses analytics, it may open a separate connection just to send analytics requests. However, if the analytics request is bundled with a keep-alive request into one connection, it would improve application performance and be a more efficient use of radio resources.
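
One way to arrange this kind of bundling is to hold back requests that can wait (such as analytics) and send them together with the next request that has to go out anyway (such as a keep-alive). The following Python sketch shows only that queuing logic. `RequestBatcher`, `defer`, `send_now`, and the `send` callback are hypothetical names for this illustration, and the actual transport is left to the caller.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RequestBatcher:
    """Hold deferrable requests until a request that must go out now is sent."""

    # Hypothetical transport: receives every request in one burst, ideally
    # writing them all to the same persistent connection.
    send: Callable[[list[str]], None]
    pending: list[str] = field(default_factory=list)

    def defer(self, request: str) -> None:
        """Queue a request that can wait, for example an analytics event."""
        self.pending.append(request)

    def send_now(self, request: str) -> None:
        """Send a request immediately, taking all queued requests with it."""
        batch = self.pending + [request]
        self.pending = []
        self.send(batch)


if __name__ == "__main__":
    bursts = []  # each entry represents one radio wake-up
    batcher = RequestBatcher(send=bursts.append)
    batcher.defer("analytics-1")
    batcher.defer("analytics-2")
    batcher.send_now("keepalive")
    print(bursts)
```

In this sketch the two analytics requests and the keep-alive leave in a single burst instead of three separate ones.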

To use persistent connections effectively, it is important to understand how requests/responses can be managed when using HTTP on a TCP connection.

TCP is a stream-based protocol. To reuse an existing connection, HTTP must have a way to indicate the end of one response and the beginning of the next. Therefore, HTTP requires that every message on the connection have a self-defined message length (one not defined by the closure of the connection). This self-demarcation is achieved either by setting the Content-Length header or by using chunked transfer encoding, in which each chunk starts with its size and the response body ends with a special last chunk. In addition to defining a message length, either side can end a connection at any time by sending a segment with the FIN flag set.
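
The two framing methods can be sketched as a small reader that consumes exactly one message body and leaves the stream positioned at the start of the next message. This is an illustrative Python sketch, not a complete HTTP parser: `read_body` is a hypothetical name, the headers are assumed to have been parsed already into a dictionary with lowercase keys, and error handling is omitted.

```python
import io


def read_body(stream, headers: dict[str, str]) -> bytes:
    """Read one message body from `stream` without touching the next message."""
    if headers.get("transfer-encoding", "").lower() == "chunked":
        body = b""
        while True:
            size_line = stream.readline().strip()
            # The chunk size is hexadecimal; anything after ';' is an extension.
            size = int(size_line.split(b";")[0], 16)
            if size == 0:
                # Last chunk: skip optional trailer fields up to the blank line.
                while stream.readline().strip():
                    pass
                return body
            body += stream.read(size)
            stream.readline()  # consume the CRLF that follows the chunk data
    # Otherwise the length comes from Content-Length (assumed 0 if absent).
    return stream.read(int(headers.get("content-length", "0")))


if __name__ == "__main__":
    chunked = io.BytesIO(b"5\r\nhello\r\n6\r\n world\r\n0\r\n\r\nNEXT")
    print(read_body(chunked, {"transfer-encoding": "chunked"}))
    print(chunked.read())  # bytes belonging to the next message
```

Because the reader stops at the message boundary, the same connection can carry the next response immediately afterward.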

In HTTP 1.1, persistent connections are the default behavior of any connection. So unless otherwise indicated, the client should assume that the server will maintain a persistent connection, even after receiving error responses from the server. However, the protocol provides the means for a client and a server to explicitly signal the closing of a TCP connection. This means that even though persistent connections are the default, the number of simultaneous connections can be controlled by closing connections when they are no longer needed.
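
In HTTP, this signal is the `Connection` header: a message carrying `Connection: close` tells the other side that the connection will be closed once the current exchange is finished. The rule can be sketched as a small Python function. `connection_persists` is a hypothetical name, and the headers are assumed to be in a dictionary with lowercase keys. The HTTP/1.0 branch, where persistence must be requested with `keep-alive`, comes from the HTTP specification and is included only for contrast.

```python
def connection_persists(http_version: str, headers: dict[str, str]) -> bool:
    """Return True if the connection should stay open after this message."""
    tokens = {t.strip().lower() for t in headers.get("connection", "").split(",")}
    if "close" in tokens:
        return False  # an explicit close always wins
    if http_version == "HTTP/1.1":
        return True   # persistent by default in HTTP/1.1
    return "keep-alive" in tokens  # HTTP/1.0 must opt in


if __name__ == "__main__":
    print(connection_persists("HTTP/1.1", {}))
    print(connection_persists("HTTP/1.1", {"connection": "close"}))
```

A client that knows it has sent its last request can add `Connection: close` to that request so that the connection is released promptly.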

By carefully managing the multiple TCP persistent connections in your application to keep the number of simultaneous connections to a reasonable level, you can improve the performance of your application, save energy, and reduce bandwidth use.

HTTP Pipelining

Another technique for improving application performance is HTTP pipelining. If an application is running on a client that supports persistent connections, it can "pipeline" its requests.

Pipelining is the technique of sending multiple requests without waiting for each response.

Because a server must send its responses to requests in the same order that the requests are received, it is not necessary to wait for each response before sending the next request. By sending multiple requests through the HTTP pipeline, instead of one at a time, an application appears faster to the user.
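
The following Python sketch shows pipelining over a raw socket: all requests are written before any response is read, and the responses are then read back in request order. A raw socket is used because Python's `http.client` does not send a second request until the first response has been read. `EchoPathHandler`, `read_response`, `pipelined_get`, and `demo` are names invented for this example, and the loopback server that echoes the request path is an assumption made so that the example runs on its own. Support for pipelining varies among real clients, servers, and proxies, so treat this as an illustration of the technique only.

```python
import socket
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoPathHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # keep the connection open between requests

    def do_GET(self):
        body = self.path.encode()  # reply with the requested path
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def read_response(reader) -> bytes:
    """Read one response framed by Content-Length and return its body."""
    reader.readline()  # status line, ignored in this sketch
    length = 0
    while True:
        line = reader.readline().strip()
        if not line:
            break  # blank line ends the headers
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            length = int(value.decode())
    return reader.read(length)


def pipelined_get(host: str, port: int, paths: list[str]) -> list[bytes]:
    requests = b"".join(
        f"GET {path} HTTP/1.1\r\nHost: {host}\r\n\r\n".encode() for path in paths
    )
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(requests)  # every request leaves before any reply is read
        with sock.makefile("rb") as reader:
            # Responses arrive in the same order the requests were sent.
            return [read_response(reader) for _ in paths]


def demo() -> list[bytes]:
    server = ThreadingHTTPServer(("127.0.0.1", 0), EchoPathHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        port = server.server_address[1]
        return pipelined_get("127.0.0.1", port, ["/first", "/second", "/third"])
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(demo())
```

Because the responses carry no identifier, the client matches each one to its request purely by order, which is why the server must preserve that order.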